A deconvolutional neural network runs the CNN process in reverse to recover signals or features that a CNN may have discarded as irrelevant to its task. ANN types are distinguished by the path the data takes from input to output. Convolutional neural networks (CNNs) are similar to feedforward networks, but they are usually used for image recognition, pattern recognition, and computer vision. These networks apply principles from linear algebra, particularly matrix multiplication, to identify patterns within an image.
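As a rough illustration of how a CNN finds patterns with multiply-and-sum operations, the sketch below slides a small kernel over an image and records the response at each position. The function name `convolve2d`, the 4x4 image, and the edge-detecting kernel are all assumptions made for this example; real CNNs learn their kernel values during training and usually rely on an optimized library.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Multiply the image patch element-wise with the kernel and sum the result.
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 grayscale image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical 2x2 kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)
```

The largest values in `feature_map` appear in the middle column, where the kernel sits across the boundary between the dark and bright halves of the image.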

Machine learning and deep learning models are capable of different types of learning, usually categorized as supervised learning, unsupervised learning, and reinforcement learning. Supervised learning uses labeled datasets to categorize or make predictions; this requires some human intervention to label the input data correctly. In contrast, unsupervised learning does not require labeled datasets; instead, it detects patterns in the data and clusters them by any distinguishing characteristics. Reinforcement learning is a process in which a model learns to perform an action in an environment more accurately, using feedback to maximize a reward. Neural networks, also called artificial neural networks (ANNs) to distinguish them from organic neural networks, or deep neural networks when they have many layers, are a type of deep learning technology within the broader field of artificial intelligence (AI).

Convolutional Neural Networks

In traditional machine learning, a data scientist manually determines the set of relevant features that the software must analyze. This limits what the software can do and makes it tedious to create and manage. Neural network training is the process of teaching a neural network to perform a task. Neural networks learn by initially processing several large sets of labeled or unlabeled data.

Neural networks originated from efforts to model information processing in biological systems through the framework of connectionism. Unlike the von Neumann model, connectionist computing does not separate memory and processing. More specifically, the part of the neural network that training modifies is the set of weights on each neuron's connections (its synapses) to the next layer of the network. Neural networks are trained using a cost function, which is an equation that measures the error in a network's prediction. As you might imagine, training neural networks falls into the category of soft-coding. When visualizing a neural network, we generally draw a line from a neuron in the previous layer to a neuron in the current layer whenever the preceding neuron has a nonzero weight in the weighted sum formula for the current neuron.
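To make the idea of a cost function concrete, here is a minimal sketch that uses mean squared error (MSE), one common choice. The function name and the sample numbers are assumptions for illustration; the text above does not say which cost function a given network uses.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical network outputs and the correct answers for three examples.
predictions = [0.9, 0.2, 0.6]
targets = [1.0, 0.0, 1.0]

cost = mean_squared_error(predictions, targets)
print(cost)  # smaller values mean the predictions are closer to the targets
```

Training then amounts to adjusting the weights so that this number goes down.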

Training A Neural Network Using A Cost Function

In the hidden layer, each neuron receives input from the previous layer neurons, computes the weighted sum, and sends it to the neurons in the next layer. Deep neural networks consist of multiple layers of interconnected nodes, each building upon the previous layer to refine and optimize the prediction or categorization. This progression of computations through the network is called forward propagation.
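Forward propagation can be sketched in a few lines. The example below assumes a tiny fully connected network with two hidden neurons and one output neuron, a bias term per neuron, and a sigmoid activation; none of these choices come from the text above, and the weights are made-up values.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute each neuron's weighted sum of the inputs, then apply the activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs


def forward_propagate(inputs, layers):
    """Feed the inputs through each layer in turn and return the final outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical weights: one row of weights per neuron, one bias per neuron.
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.0]),  # hidden layer with 2 neurons
    ([[1.0, -1.0]], [0.0]),                     # output layer with 1 neuron
]

output = forward_propagate([1.0, 1.0], layers)
print(output)
```

Each layer's outputs become the next layer's inputs, which is the progression described above.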

Inputs that contribute to getting the right answers are weighted higher. The terms deep learning and neural networks tend to be used interchangeably in conversation, which can be confusing. It is worth noting that the “deep” in deep learning refers only to the number of layers in a neural network. A neural network with more than three layers, counting the input and output layers, can be considered a deep learning algorithm.

What are the types of neural networks?

Techniques used with neural networks include gradient-based training, fuzzy logic, genetic algorithms and Bayesian methods. The networks might be given some basic rules about object relationships in the data being modeled. Artificial neural networks are vital to creating AI and deep learning algorithms, so it is useful to gain skills in developing, training, and building them.

Training consists of providing input and telling the network what the output should be. For example, to build a network that identifies the faces of actors, the initial training might be a series of pictures, including actors, non-actors, masks, statues and animal faces. Each input is accompanied by a matching label, such as the actor’s name, “not actor” or “not human.” Providing the answers allows the model to adjust its internal weightings to do its job better. ANNs use a “weight,” which is the strength of the connection between nodes in the network.
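The loop of "show an input, compare with the known answer, adjust the weights" can be sketched with a single linear neuron. This is a toy example under stated assumptions: the update rule is plain gradient descent on squared error, the function name `train_linear_neuron` is hypothetical, and the labeled samples are made up to follow y = 2x + 1. A face classifier like the one described above would need a far larger network and dataset.

```python
def train_linear_neuron(samples, learning_rate=0.1, epochs=200):
    """Fit prediction = weight * x + bias to labeled (x, target) pairs."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = weight * x + bias
            error = prediction - target
            # Gradient of 0.5 * error**2 with respect to weight and bias.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias


# Hypothetical labeled data: each input x comes with the correct answer.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]

weight, bias = train_linear_neuron(samples)
print(weight, bias)  # should approach 2 and 1 for this data
```

Because every sample carries its correct answer, each pass nudges the weight and bias in the direction that reduces the error.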

The Rectifier Function

The multilayer perceptron is a universal function approximator, as proven by the universal approximation theorem. However, the proof is not constructive regarding the number of neurons required, the network topology, the weights and the learning parameters. In applications such as playing video games, an actor takes a string of actions, receiving a generally unpredictable response from the environment after each one.

Neural networks are widely used in a variety of applications, including image recognition, predictive modeling and natural language processing (NLP). Examples of significant commercial applications since 2000 include handwriting recognition for check processing, speech-to-text transcription, oil exploration data analysis, weather prediction and facial recognition. Populations of interconnected neurons that are smaller than neural networks are called neural circuits. Very large interconnected networks are called large scale brain networks, and many of these together form brains and nervous systems.

To model a nonlinear problem, we can directly introduce a nonlinearity. For a neural network to learn, there has to be an element of feedback involved, just as children learn by being told what they’re doing right or wrong. Think back to when you first learned to play a game like ten-pin bowling. As you picked up the heavy ball and rolled it down the alley, your brain watched how quickly the ball moved and the line it followed, and noted how close you came to knocking down the pins. Next time it was your turn, you remembered what you’d done wrong before, modified your movements accordingly, and hopefully threw the ball a bit better.
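One common way to introduce a nonlinearity is the rectifier function (often called ReLU), which passes positive values through unchanged and replaces negative values with zero. The sketch below is illustrative only; the helper name `neuron_with_relu` and the sample values are assumptions.

```python
def relu(x):
    """Rectifier: return x when it is positive, otherwise 0."""
    return max(0.0, x)


def neuron_with_relu(inputs, weights, bias):
    """Weighted sum of the inputs followed by the rectifier nonlinearity."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return relu(weighted_sum)


# Apply the rectifier to a few hypothetical weighted sums.
outputs = [relu(v) for v in [-2.0, -0.5, 0.0, 1.5]]
print(outputs)
```

Without a step like this, stacking layers of weighted sums would still produce only a linear function of the inputs.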

- Multilayer Perceptron (MLP), Convolutional Neural Network (CNN) and Recurrent Neural Networks (RNN) are used for weather forecasting.

- Artificial neural networks were originally used to model biological neural networks starting in the 1930s under the approach of connectionism.

- High-performance graphics processing units (GPUs) are ideal because they can handle a large volume of calculations across many cores with copious memory available.

- Because CNNs are used in image processing, medical imaging data retrieved from diagnostic tests can be analyzed and assessed with neural network models.

- Deep learning algorithms can determine which features (e.g. ears) are most important to distinguish each animal from another.

- Each blue circle represents an input feature, and the green circle represents the weighted sum of the inputs.

A network might or might not have hidden node layers; networks without them are easier to interpret. Supervised neural networks that use a mean squared error (MSE) cost function can use formal statistical methods to determine the confidence of the trained model. The MSE on a validation set can be used as an estimate of the variance. This value can then be used to calculate the confidence interval of the network output, assuming a normal distribution. A confidence analysis made this way is statistically valid as long as the output probability distribution stays the same and the network is not modified. In the majority of neural networks, units are interconnected from one layer to another.
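The confidence calculation can be sketched as follows. This is a simplified example that assumes the MSE measured on a validation set is used as the variance estimate, that prediction errors are normally distributed with zero mean, and that a z-value of 1.96 gives roughly 95% coverage. The function name `confidence_interval` and the numbers are hypothetical.

```python
import math


def confidence_interval(prediction, validation_mse, z=1.96):
    """Return (low, high) bounds around a prediction.

    Treats validation_mse as the error variance and assumes normally
    distributed errors; z=1.96 corresponds to roughly 95% coverage.
    """
    standard_deviation = math.sqrt(validation_mse)
    margin = z * standard_deviation
    return prediction - margin, prediction + margin


# Hypothetical network output of 10.0 with a validation MSE of 0.25.
low, high = confidence_interval(10.0, 0.25)
print(low, high)
```

The interval is only as trustworthy as the assumptions behind it: if the data distribution shifts or the network is retrained, the validation MSE must be measured again.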

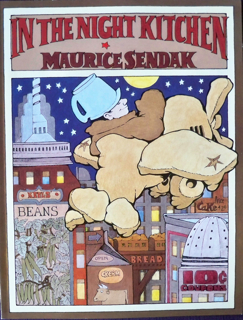

Introduction to Convolutional Neural Networks

Biological brains use both shallow and deep circuits as reported by brain anatomy,[225] displaying a wide variety of invariance. Weng[226] argued that the brain self-wires largely according to signal statistics and therefore, a serial cascade cannot catch all major statistical dependencies. Ciresan and colleagues built the first pattern recognizers to achieve human-competitive/superhuman performance[98] on benchmarks such as traffic sign recognition (IJCNN 2012). Here are two instances of how you might identify cats within a data set using soft-coding and hard-coding techniques. It leaves room for the program to understand what is happening in the data set.